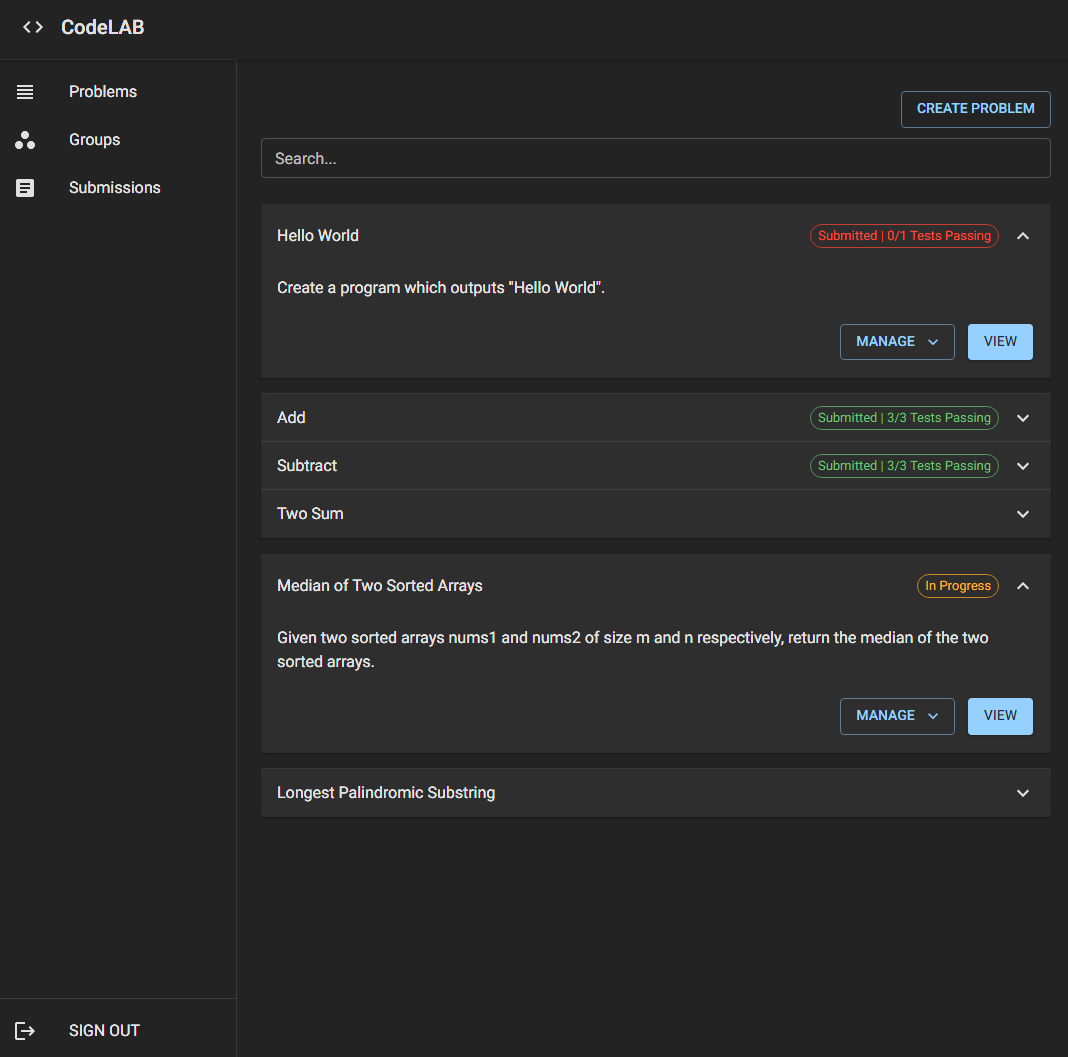

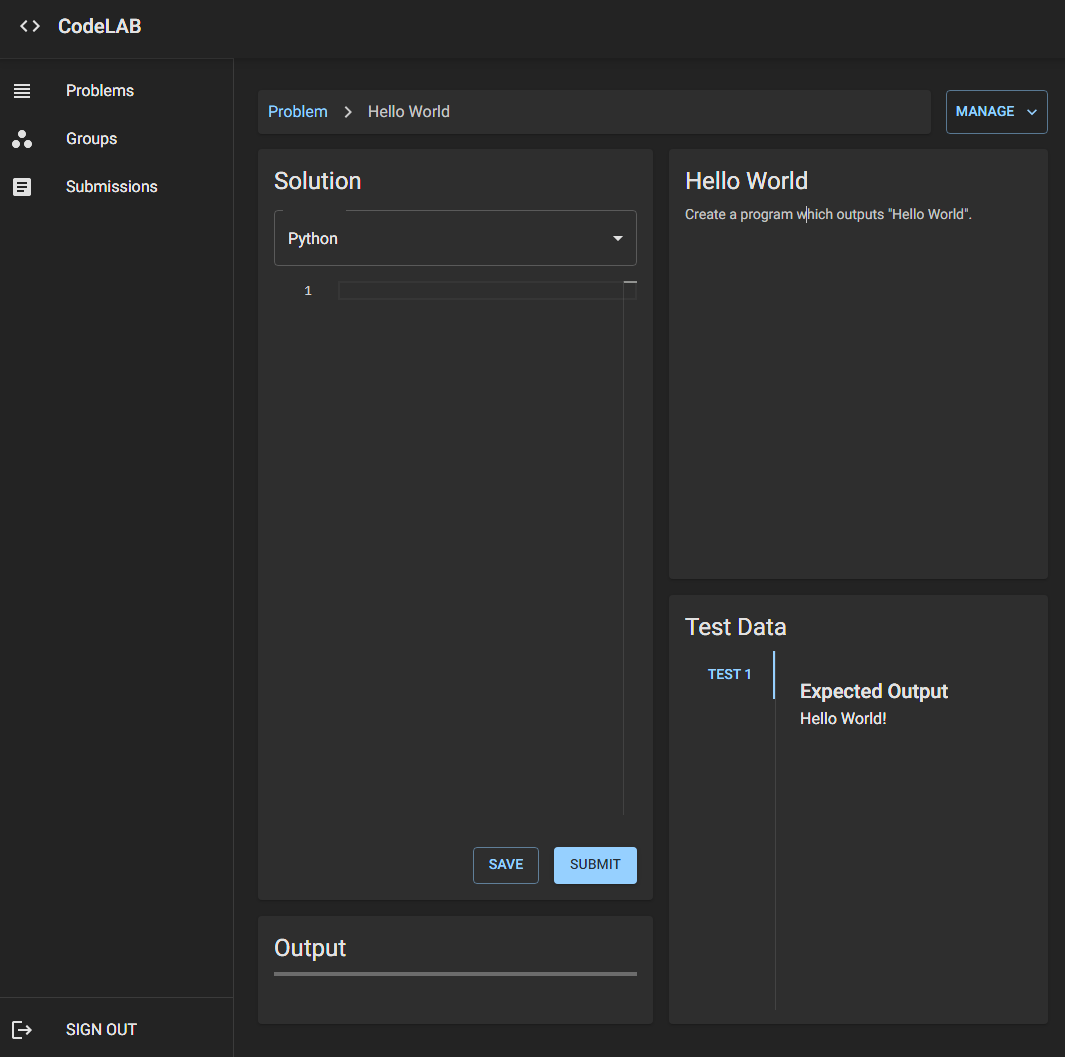

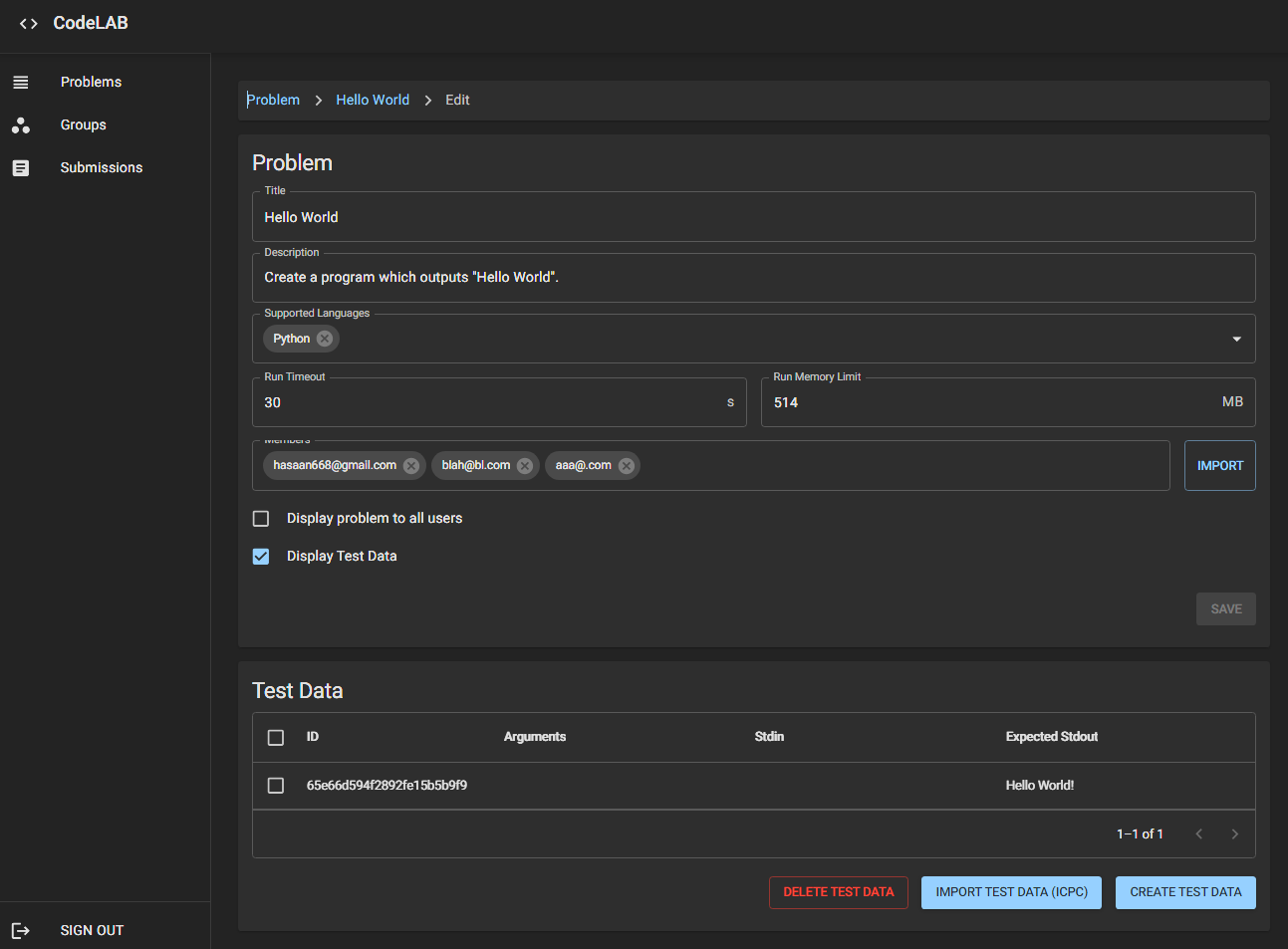

CodeLAB is an automated code assessment platform I designed and developed during my time at the University of Manchester. I created the platform to increase the efficiency of supervisors by alleviating the burden of the repetitive and tedious portions of the marking process. The application serves as an end-to-end platform, from creating assignments for students to validating submitted programmatic solutions against their expected outputs.

Architecture Choices

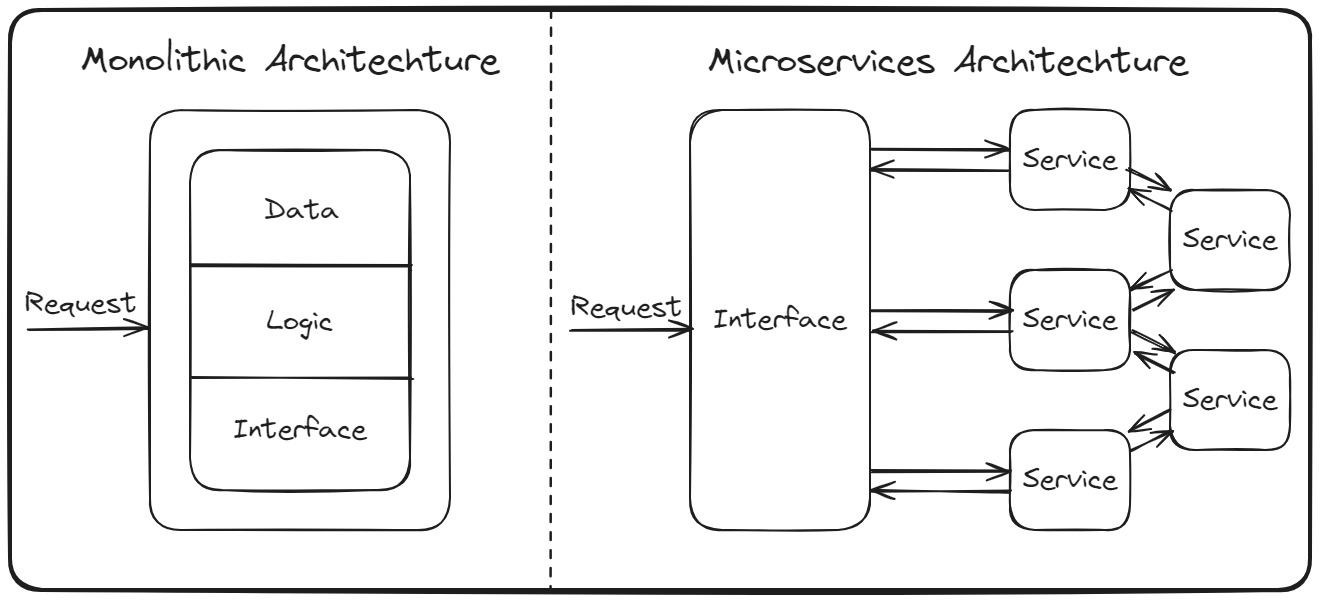

I opted to design the platform using a microservices architecture. This choice helped me create an easily scalable and maintainable solution whilst also giving me technical experience of working with microservices. The approach did bring some challenges, notably in data management and service orchestration, but these difficulties helped me develop my skill set.

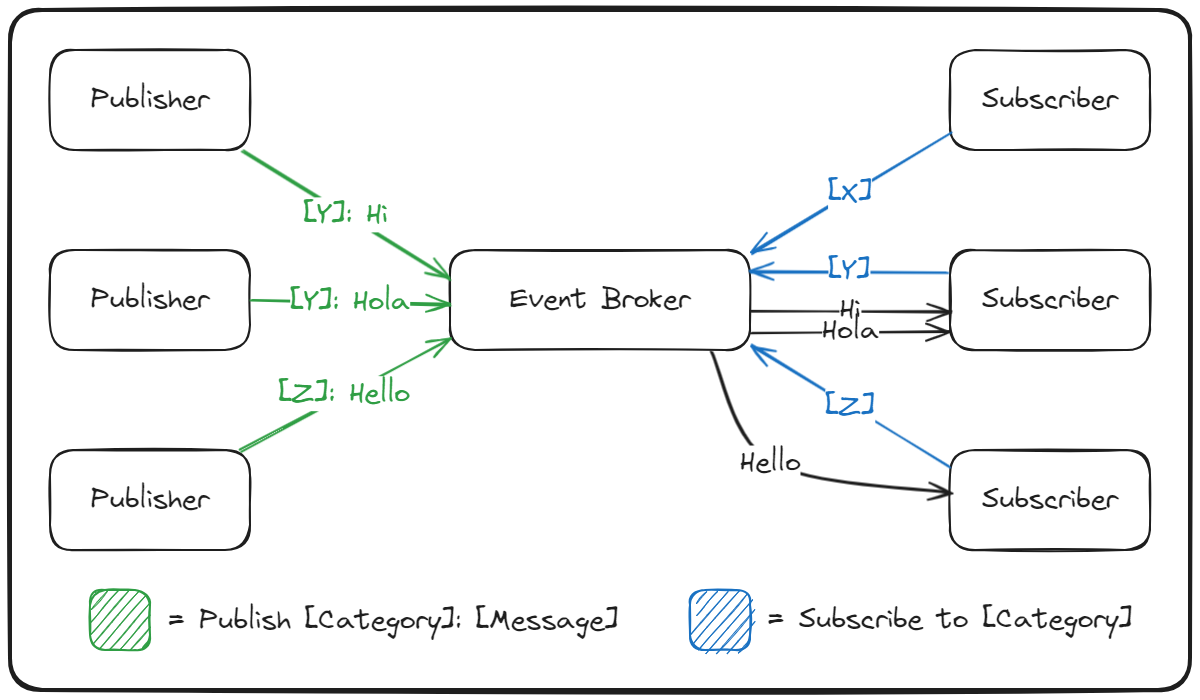

The solution needs a good user experience, and part of that is responsiveness. However, running tests against submitted code takes an indeterminate amount of time. I used the publish-subscribe design pattern to keep the user informed, in real time, of any events relating to their submissions.
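To make the pattern concrete, here is a minimal in-memory sketch of publish-subscribe keyed by submission ID. It is not the platform's implementation, which uses RabbitMQ as its event broker; the `StatusBroker` class, its method names, and the event strings are all hypothetical.

```python
import queue
import threading
from collections import defaultdict


class StatusBroker:
    """In-memory stand-in for an event broker, keyed by submission ID (hypothetical)."""

    def __init__(self):
        self._subscribers = defaultdict(list)
        self._lock = threading.Lock()

    def subscribe(self, submission_id):
        """Register interest in a submission and return a queue of its events."""
        inbox = queue.Queue()
        with self._lock:
            self._subscribers[submission_id].append(inbox)
        return inbox

    def publish(self, submission_id, event):
        """Deliver an event to every current subscriber of the submission."""
        with self._lock:
            inboxes = list(self._subscribers.get(submission_id, []))
        for inbox in inboxes:
            inbox.put(event)


broker = StatusBroker()
inbox = broker.subscribe("submission-1")
broker.publish("submission-1", "received")
broker.publish("submission-2", "received")  # no subscriber, so nothing is delivered
broker.publish("submission-1", "tests complete")
```

The publisher never needs to know who is listening, which is what lets a long-running test job report progress without the client having to poll for it.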

Architecting CodeLAB

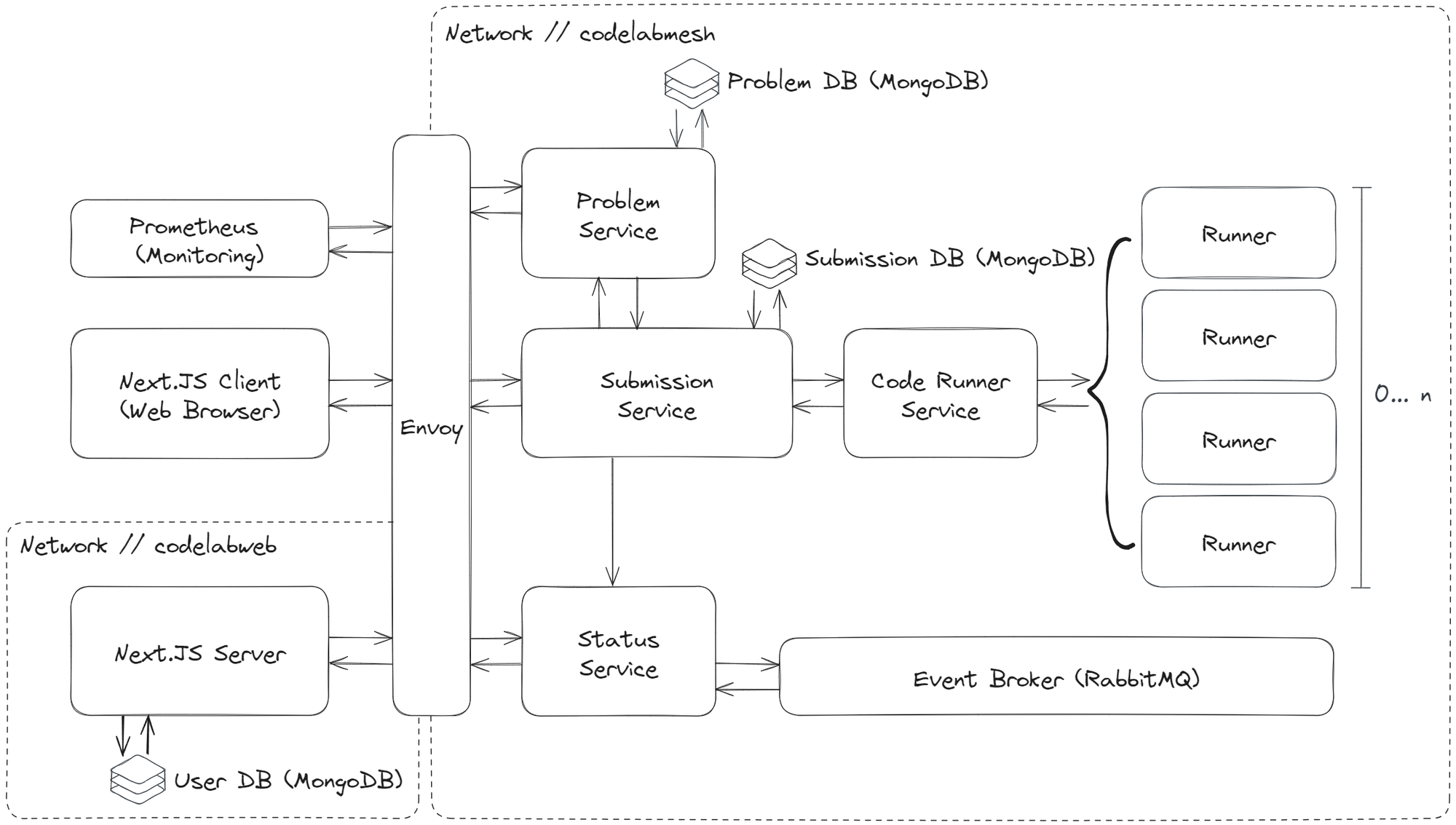

The back end has been segmented into four separate microservice containers: the problem service, the status service, the code runner service, and the submission service. The code runner can dynamically create new containers used short-term as runners. The problem and submission services persist data and are connected to independent instances of a MongoDB server. The status service is connected to a RabbitMQ server which is used as an event broker. All back-end microservice components are attached to a private network interface to prevent direct external access and required APIs are exposed through the Envoy API Gateway. Additionally, Prometheus is connected to the Envoy API Gateway for monitoring.

The Next.js server handles SSR and any server-side operations for the front end. It is connected to its own MongoDB instance to store user data and is attached to a separate network interface. This creates a network boundary between it and the other services, which it does not need to communicate with directly. The Next.js server is exposed through the Envoy API Gateway.

The microservices within the system were designed to be stateless, meaning that no request depends on a request made before it. Stateless microservices can be replicated in deployments, which allows the system to be scaled easily. It also makes the system more reliable and performant: requests are spread across multiple instances of a service, which prevents any single instance from becoming overloaded.

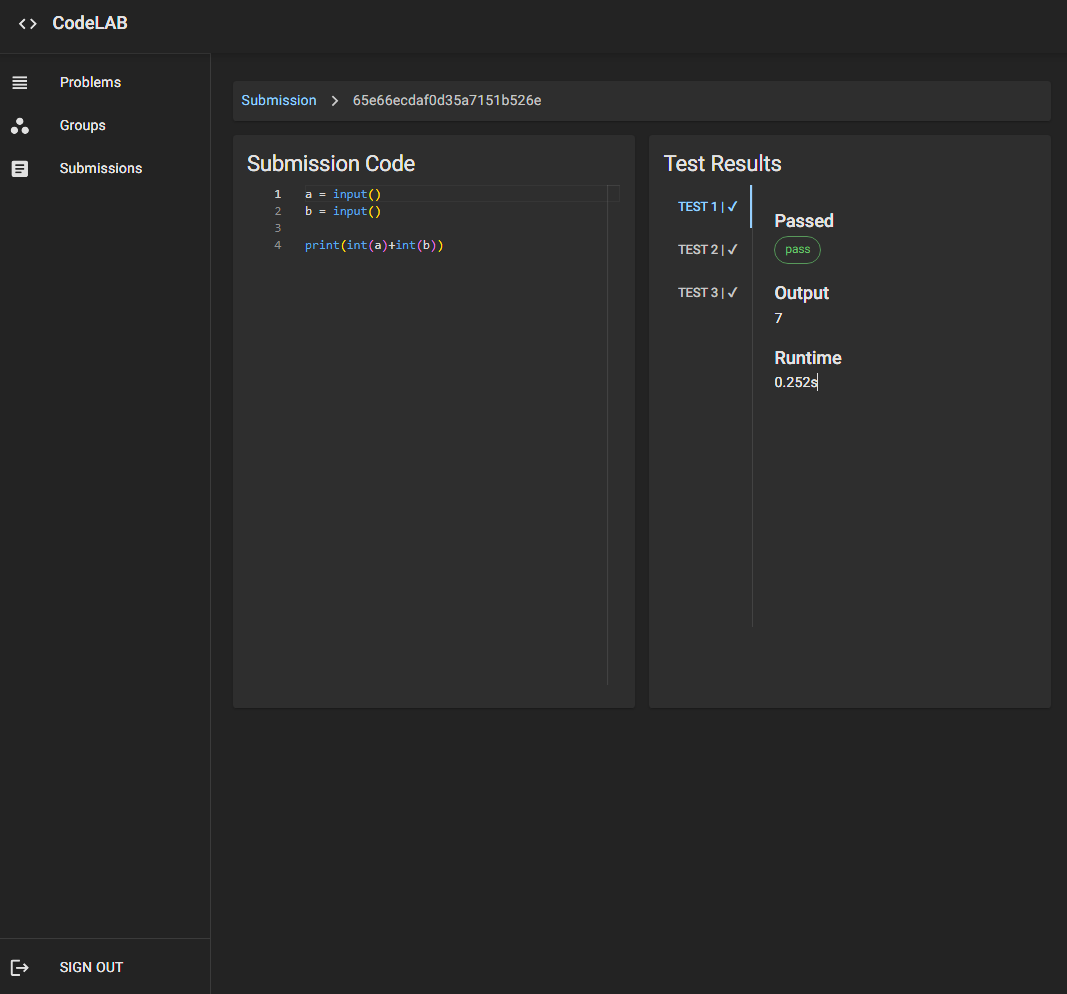

Running Tests

The code runner service handles the execution of arbitrary code and the evaluation of tests. Execution of arbitrary code on a system brings substantial security concerns. I decided to use a containerization technique leveraging Docker for the code runner service as it can efficiently and securely execute short-lived code. I used the Docker Python Software Development Kit (SDK) to dynamically build execution environments where code could be safely executed without affecting the host.

Docker Hub’s public repository of container images was used as a basis to create execution environments. Many existing container images created by organizations and the community are already available. Container images with the required dependencies for executing code in Python, Java, JavaScript, C, and Prolog were already available and used in the code runner service.
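As an illustration only, a code runner could select an execution environment through a lookup table like the one below. The image tags and run commands are assumptions chosen from Docker Hub's official images; the source does not say which images or commands CodeLAB actually used, and the `Runtime` and `runtime_for` names are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Runtime:
    image: str    # Docker Hub image to base the execution environment on
    command: str  # shell command that runs the submission inside it


# Assumed images and commands, not the platform's actual configuration.
RUNTIMES = {
    "python": Runtime("python:3.12-slim", "python3 main.py"),
    "java": Runtime("eclipse-temurin:21", "javac Main.java && java Main"),
    "javascript": Runtime("node:20-slim", "node main.js"),
    "c": Runtime("gcc:13", "gcc main.c -o main && ./main"),
    "prolog": Runtime("swipl:latest", "swipl -q -g main -t halt main.pl"),
}


def runtime_for(language):
    """Return the runtime for a language name, ignoring case."""
    try:
        return RUNTIMES[language.lower()]
    except KeyError:
        raise ValueError(f"unsupported language: {language}") from None
```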

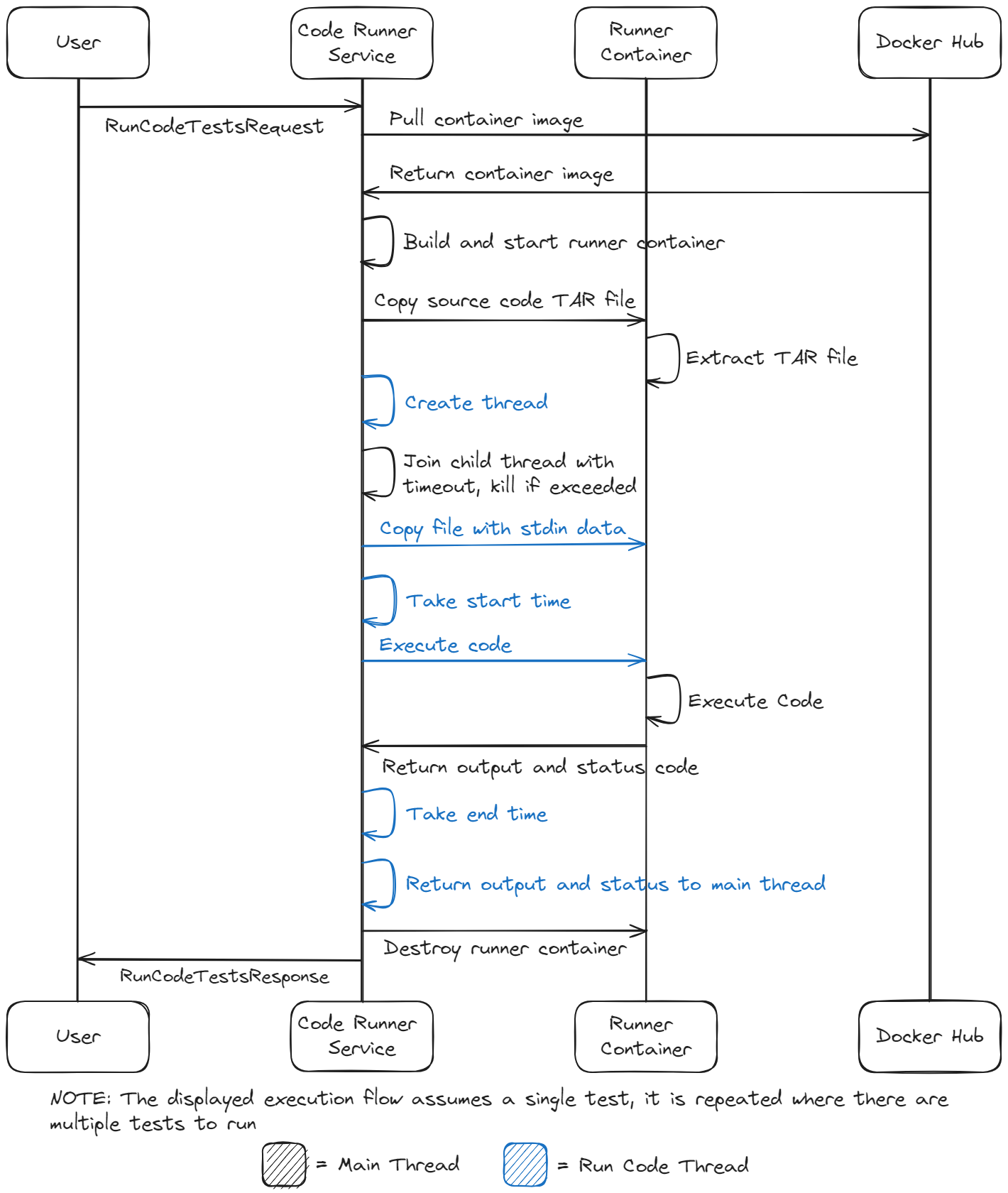

When the code runner service receives a RunCodeTests API call, it performs the following steps for each test:

- The Docker container for code execution is prepared.

- The container image is pulled from Docker Hub if unavailable in the system cache.

- The container is built and started.

- The source code files are packaged into a Tape Archive (TAR) file, which is then loaded into the container and extracted.

- A child thread is created to prepare for and execute the code within the container. The main thread joins the child thread with a timeout; if the timeout is exceeded, the container is forcefully killed, and nothing is returned. The child thread performs the following:

  - A file containing text to pipe to stdin is loaded into the container.

  - A starting timestamp is taken.

  - The code is executed.

  - An end timestamp is taken.

  - The output from code execution and the exit code are returned to the main thread.

- The Docker container used for execution is destroyed.

- A response with the test output, runtime, and a pass/fail evaluation is returned.
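The core of these steps can be sketched with the standard library alone. This is a simplified illustration rather than the service's code: the container is replaced by plain callables so the example runs without Docker, and the function names and the pass criterion (exit code 0 and matching output, ignoring surrounding whitespace) are assumptions. With the Docker SDK, the archive bytes could be handed to a container's `put_archive` method and the `kill` callback could call the container's `kill` method.

```python
import io
import tarfile
import threading
import time


def package_sources(files):
    """Pack {filename: source text} into an in-memory TAR archive."""
    buffer = io.BytesIO()
    with tarfile.open(fileobj=buffer, mode="w") as archive:
        for name, text in files.items():
            data = text.encode("utf-8")
            info = tarfile.TarInfo(name=name)
            info.size = len(data)
            archive.addfile(info, io.BytesIO(data))
    return buffer.getvalue()


def run_with_timeout(execute, timeout_seconds, kill):
    """Run execute() on a child thread; call kill() and return None on timeout."""
    outcome = {}

    def worker():
        started = time.monotonic()           # starting timestamp
        output, exit_code = execute()        # stands in for running the code
        runtime = time.monotonic() - started  # end timestamp minus start
        outcome["result"] = (output, exit_code, runtime)

    child = threading.Thread(target=worker, daemon=True)
    child.start()
    child.join(timeout_seconds)
    if child.is_alive():
        kill()  # e.g. forcefully kill the container
        return None
    return outcome.get("result")


def evaluate(result, expected_output):
    """Assumed pass rule: finished in time, exit code 0, output matches."""
    if result is None:
        return False
    output, exit_code, _runtime = result
    return exit_code == 0 and output.strip() == expected_output.strip()


def slow_program():
    time.sleep(0.5)
    return "", 0


archive = package_sources({"main.py": "print(input())"})
fast = run_with_timeout(lambda: ("hello\n", 0), 1.0, kill=lambda: None)
killed = []
slow = run_with_timeout(slow_program, 0.05, kill=lambda: killed.append(True))
```

Joining with a timeout keeps the main thread in control: it can give up on a runaway submission and destroy the container without waiting for the child thread to finish.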

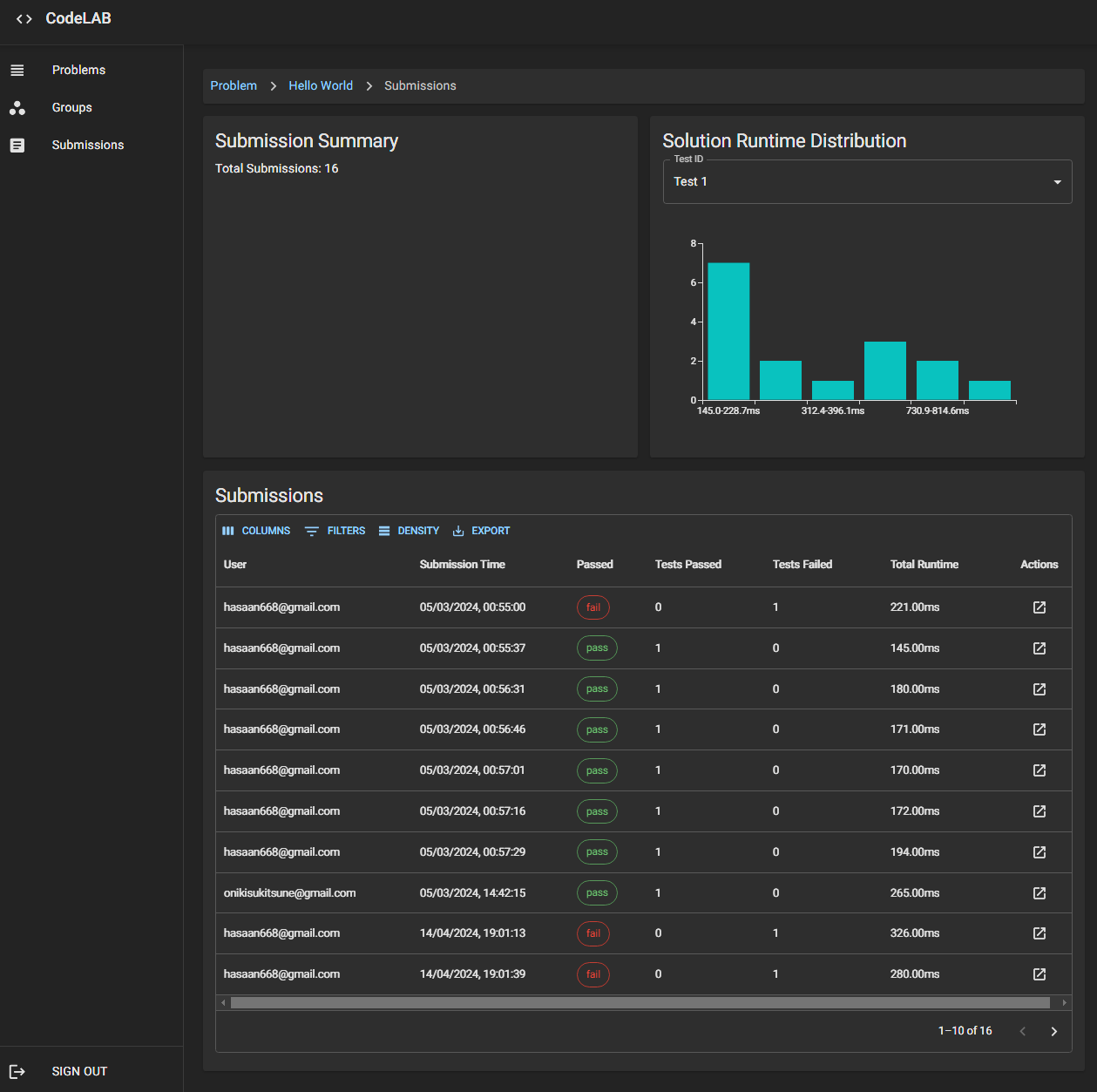

Processing User Submissions

The submission service orchestrates user submissions and manages submission progress. The service saves any user submissions that may be in progress, requests the code runner to test any completed submissions, and stores the results of any completed submissions. Data is stored in the submission service's dedicated MongoDB instance, and queries are made using the pymongo Python library.

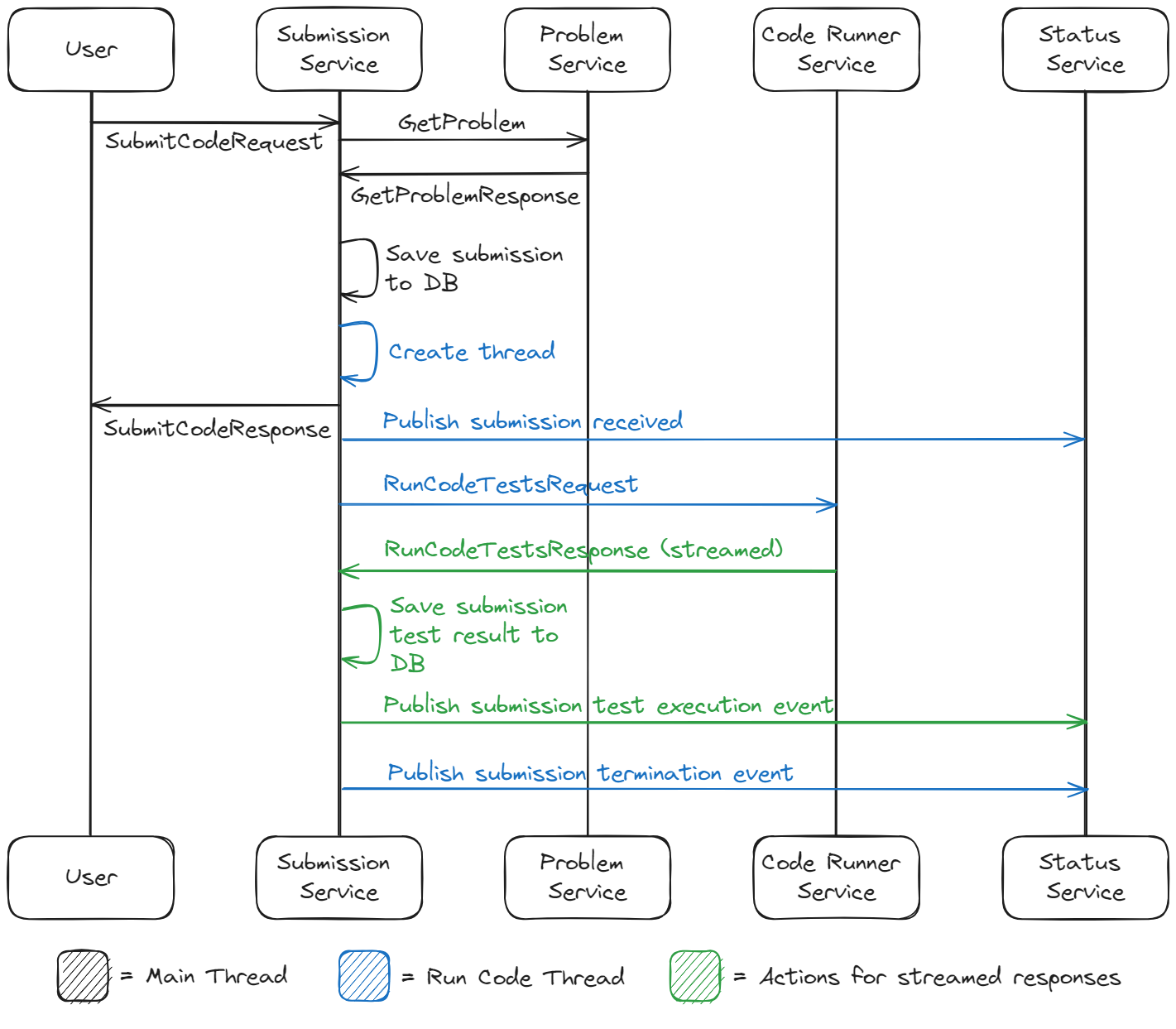

When the submission service receives a SubmitCode API call, it performs the following steps:

- A GetProblem request is made to the problem service for the data associated with the problem for which the submission was made, particularly the test data. The problem data has to be re-requested to maintain trust; the test data cannot be sent with the submission request, because that request is made from the user's client-side browser, where the user could have manipulated the data.

- The submission is saved in the service's MongoDB after being converted to JSON from its initial protobuf form.

- A new thread is created to handle making a request to the code runner for testing the submission. The tests could take a prolonged period of time; due to this, the decision was made to save the results asynchronously so that the submission service could return an instantaneous response.

- A unique identifier (ID) is generated for the submission and returned as a response, indicating that the submission has been saved and test execution will soon begin. The caller may use the submission ID to subscribe to the status service for events relating to the test execution progress.

- The following is handled within the thread that was created earlier:

  - An event for the submission is published to the status service to notify that it has been received.

  - A protobuf for the code runner RunCodeTests API call is created. The submission code and the test data are added to the request protobuf's data.

  - The RunCodeTests API call is made with the created request protobuf. The request returns a server-streaming response, meaning one request is made, but multiple responses are returned. The test execution stage is identified by a run stage parameter in the response. The value of the run stage parameter indicates either that the response contains results from test execution or that test execution is complete.

  - If the response indicates it contains results from test execution:

    - The test results are saved alongside the associated submission data in the submission service's MongoDB.

    - An event for the submission is published to the status service to notify that new test execution results have become available.

  - If the response indicates test execution is complete:

    - An event for the submission is published to the status service to notify that all tests for the submission have been completed. The event includes information about whether the tests passed, failed, or timed out.

    - A termination event for the submission is published to the status service as no more events will be made available.
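The flow above can be sketched as follows. This is a hedged approximation, not the service's code: the problem service, code runner, database, and status service are passed in as plain callables and a dictionary, a Python generator stands in for the server-streaming response, and every name, dictionary key, and event string is hypothetical. The ID is also generated before saving here purely to keep the example short.

```python
import threading
import uuid


def submit_code(submission, get_problem, run_code_tests, store, publish):
    """Save the submission, start testing in the background, return its ID."""
    # Re-fetch the tests server-side rather than trusting the client's copy.
    problem = get_problem(submission["problem_id"])
    submission_id = uuid.uuid4().hex
    store[submission_id] = {"submission": submission, "results": []}

    def process():
        publish(submission_id, "received")
        # run_code_tests yields responses one by one, like a server stream.
        for response in run_code_tests(submission["code"], problem["tests"]):
            if response["stage"] == "result":
                store[submission_id]["results"].append(response["result"])
                publish(submission_id, "result")
            elif response["stage"] == "complete":
                publish(submission_id, "complete:" + response["outcome"])
                publish(submission_id, "terminated")

    worker = threading.Thread(target=process)
    worker.start()
    # The worker is returned only so this example can wait for it to finish.
    return submission_id, worker


def fake_runner(code, tests):
    """Stand-in for the code runner: one result per test, then completion."""
    for test in tests:
        yield {"stage": "result", "result": {"test": test, "passed": True}}
    yield {"stage": "complete", "outcome": "passed"}


events = []
store = {}
submission_id, worker = submit_code(
    {"problem_id": "p1", "code": "print(1)"},
    get_problem=lambda problem_id: {"tests": ["t1", "t2"]},
    run_code_tests=fake_runner,
    store=store,
    publish=lambda sid, event: events.append(event),
)
worker.join()
```

Because the caller receives the ID before any test has run, it can subscribe to status events straight away and receive each result as it is published.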

Platform Screenshots